X (formerly Twitter) is stepping into new territory again—this time by letting AI join the ranks of fact-checkers.

The platform is testing a feature that allows AI chatbots, including its own Grok and others connected via API, to generate Community Notes. These notes, originally introduced to crowdsource context and corrections for viral posts, have become a key feature under Elon Musk’s leadership. They often pop up to clarify AI-generated content, misleading political claims, or out-of-context clips—and they’ve inspired copycats at Meta, TikTok, and YouTube.

Now, AI is being invited to play a bigger role—but not without human oversight.

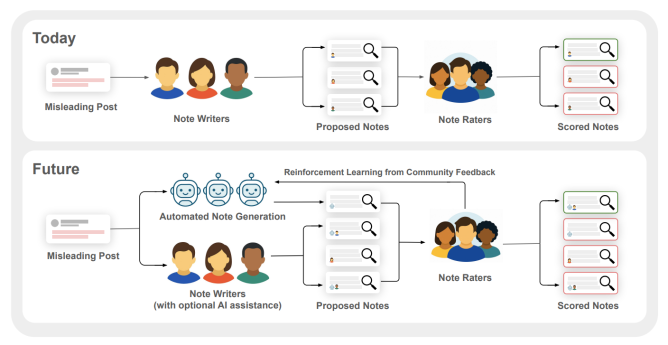

According to researchers from the Community Notes team, the idea isn’t to replace humans but to create a feedback loop: AI drafts candidate notes, and humans keep the final say through their ratings, which are also fed back to the models as a reinforcement-learning signal. In other words, large language models (LLMs) aren’t here to decide what’s true; they’re meant to help people think more critically.

Still, the move carries risks. Generative AI is known to “hallucinate,” confidently presenting false information, and if these bots prioritize sounding helpful over being accurate, they could muddy the waters they’re meant to clarify. A flood of AI-generated submissions could also overwhelm or burn out human reviewers, which is especially concerning because Community Notes relies on volunteer raters.

Importantly, these AI-generated notes won’t skip the vetting process. They’ll be treated like any user-submitted note and will only go public after earning cross-ideological consensus.
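To make “cross-ideological consensus” concrete, here is a minimal Python sketch of the general idea. It is not X’s actual scoring method, which this article doesn’t describe. The `Rating` type, the group labels, and both thresholds are hypothetical, chosen only for illustration. The point it shows is that a note needs enough “helpful” ratings from more than one viewpoint group, and that whether the author is a bot or a person never enters the check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    rater_group: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool     # did this rater mark the note as helpful?


def has_cross_group_consensus(ratings, min_per_group=2, min_helpful_share=0.6):
    """Return True only if at least two groups rated the note and
    every group that rated it found it helpful often enough.

    Both thresholds are illustrative assumptions, not real platform values.
    """
    votes_by_group = {}
    for rating in ratings:
        votes_by_group.setdefault(rating.rater_group, []).append(rating.helpful)

    # Agreement inside a single group is not cross-group consensus.
    if len(votes_by_group) < 2:
        return False

    for votes in votes_by_group.values():
        if len(votes) < min_per_group:
            return False  # too few ratings from this group to count
        if sum(votes) / len(votes) < min_helpful_share:
            return False  # this group mostly found the note unhelpful
    return True


# A note that only one side likes stays private...
one_sided = [Rating("A", True)] * 5 + [Rating("B", False)] * 2
# ...while a note that both sides mostly rate helpful can go public.
bridging = [Rating("A", True)] * 3 + [Rating("B", True), Rating("B", True), Rating("B", False)]

one_sided_result = has_cross_group_consensus(one_sided)
bridging_result = has_cross_group_consensus(bridging)
```

In this sketch the one-sided note fails despite having five helpful ratings, because popularity within one group is not enough. A high volume of AI-written drafts would face the same bar.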

For now, it’s just a pilot. X plans to quietly test this behind the scenes over the next few weeks. Whether AI becomes a trustworthy co-editor in the fight against misinformation—or adds more noise—remains to be seen.